MPI-1

- Fixed process model

- Point-to-point communications

- Collective operations

- Communicators for safe library writing

- Utility routines

MPI-2

- Dynamic process management

- One-sided communications

- Cooperative I/O

Dynamic Process Management

- MPI-1 had a static (fixed) number of processes

- Processes cannot be added or removed at run time

- The cost of having idle processes may be large.

- Some applications favour dynamic spawning

- run-time assessment of environment

- serial applications with parallel modules

- scavenger applications.

CAUTION: process initiation is expensive, hence requires careful thought.

MPI-2 Process Management

- Parents spawn children

- Existing MPI applications can connect

- Formerly connected sub-applications can tear down their communications and become independent.

Task Spawning - MPI_Comm_spawn()

- It is a collective operation over the parent processes' communicator.

- The info parameter says how to start the new processes (host, architecture, working directory, path).

- intercomm and errcodes are output arguments: the inter-communicator to the children and one error code per spawned process.

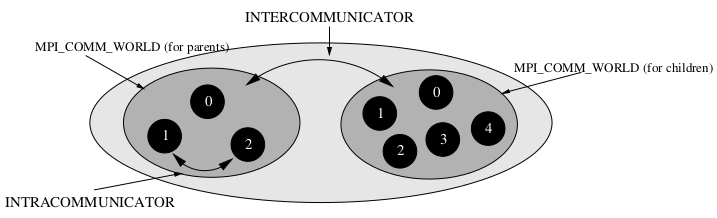

Communicators

- MPI processes are identified by (group, rank) pairs.

- Communicators are either intra-group, or

- inter-group: ranks refer to processes in the remote group.

- Processes in the parent and children groups each have their own MPI_COMM_WORLD

- Send and Recv take a destination rank and an inter- or intra-communicator.

- It is possible to merge the parent and child processes into one group with MPI_Intercomm_merge(), or to free parents from children with MPI_Comm_free().

One-sided Communications

In traditional message passing, every transfer carries an implicit synchronisation between sender and receiver, although asynchronous (non-blocking) message passing may delay it.

In One-sided Communications,

- One process specifies all communication parameters.

- Data transfer and synchronisation are separate.

- Typical operations are put, get and accumulate: MPI_Put(), MPI_Get(), MPI_Accumulate()

MPI-2 Remote Memory Access (RMA)

- Processes designate a portion (or window) of their address space that they explicitly expose to RMA operations: MPI_Win_create()

- Two types of targets

- Active target RMA: requires all processes that created the window to call MPI_Win_fence() before any RMA operation is guaranteed to complete.

- It is one-sided communication in that no process is required to post a recv.

- It is cooperative in that all processes must synchronize before any of them is guaranteed to have got/put data.

- Passive target RMA: the only requirement is that the originating process calls MPI_Win_lock() and MPI_Win_unlock() before and after the data transfer.

- The transfer is guaranteed to have completed on return from unlock.

- This is known as one-sided communication, since the target takes no part in the transfer.

- Races caused by unsynchronised access can be avoided with locks or mutexes.

MPI-2 File Operations

- Positioning: explicit offset, shared pointer / individual pointers

- Synchronisation: blocking / non-blocking (asynchronous)

- Coordination: collective / non-collective

- File types:

- A file type is a datatype made up of elementary types, e.g. MPI_INT

- It allows non-contiguous accesses to be specified.

- It can be tiled, so that each process writes to its own block of the file.

MPI-IO Usage

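- Each process can write its own data to a separate file

- Processes can append data to a single file (e.g. a log file), using one shared file pointer

- Processes can cooperate to write one large matrix to a file

- Create a file type that tiles the file

- Use individual file pointers

- Use collective operations, which make data shuffling possible

- A parallel file system may be used underneath, but it can look like an ordinary file system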

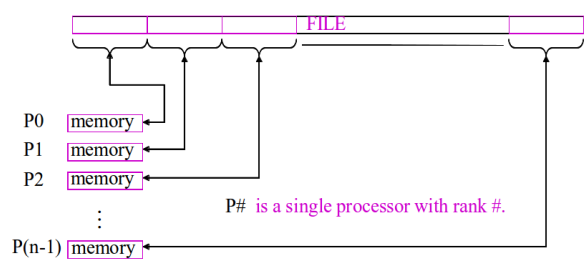

Simple MPI I/O

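Cooperative file operations using individual file pointers: each process writes data from its own memory into its assigned tile of the file.

- MPI_File_open() is called over a communicator and creates the individual file pointers and the shared file pointer

- The info parameter passes hints about the file, such as performance tuning and special-case handling

- Each read or write needs positioning, which is done with

- MPI_File_seek(); … MPI_File_read(): uses the individual file pointer

- MPI_File_read_at(): reads directly at the given offset

- MPI_File_seek_shared(); … MPI_File_read_shared(): uses the shared file pointer. MPI_File_seek_shared() is collective.

- Reads and writes specify a buffer, a count and a datatype (as in the usual send/recv)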

- MPI_File_close() is also collective.

MPI-2 and Beyond

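- MPI-2 added many features, but it became much slower than MPI-1's features, and vendors' implementations of them remained incomplete for a long time

- MPI-3 improved one-sided communication and introduced non-blocking collectives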